Wednesday, December 4, 2019

Saturday, November 30, 2019

Learn Django Step by Step

Newer Django needs Python version > 3.4


7) update views.py file to define start page

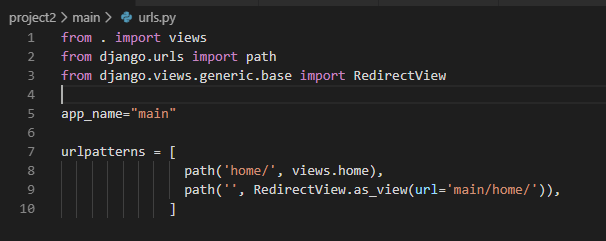

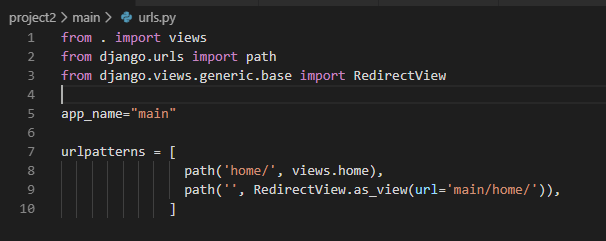

8) create main\urls.py file inside new app directory

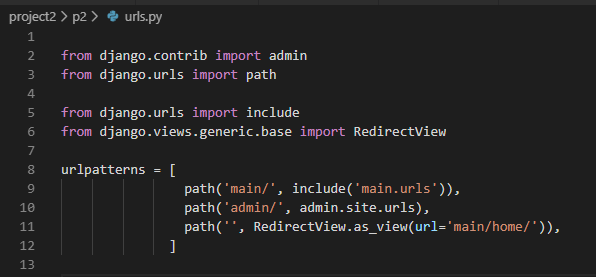

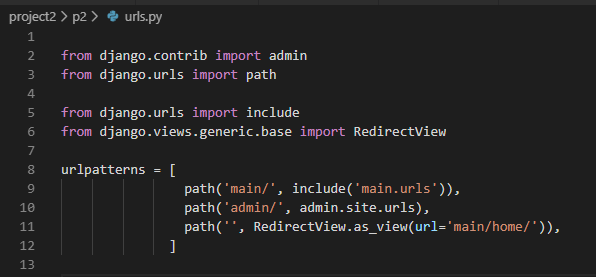

9) update original urls.py to include new app routes file (urls.py)

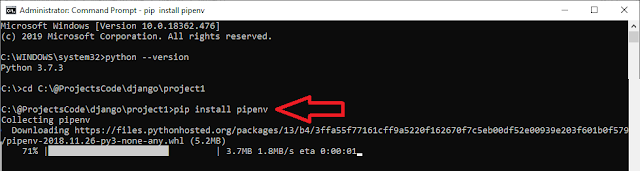

To set up your machine to run Django:

1) Install the latest Python 3.x with pip support

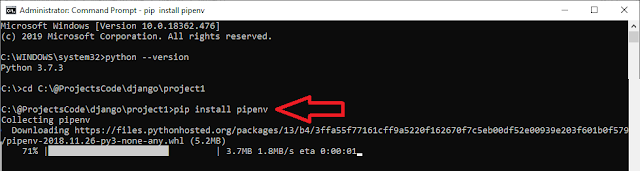

2) Install Pipenv (for more information about pipenv check https://realpython.com/pipenv-guide/)

pip install pipenv

3) Install the latest Django framework in the current directory only

Pipenv's basic install command takes the form:

$ pipenv install [package names]

The user can provide these additional parameters:

--two: Performs the installation in a virtualenv using the system python2 link.

--three: Performs the installation in a virtualenv using the system python3 link.

--python: Performs the installation in a virtualenv using the provided Python interpreter.

$ mkdir project1

$ cd project1

$ pipenv --three install Django

4) Activate virtual env

pipenv shell

5) Create a new project named "p1" in the current folder

django-admin startproject p1 .

At this point we should have an initial folder structure (in the current folder) that looks like this:

- manage.py: The command-line utility that allows you to interact with the Django project. You will use this very frequently.

- p1: A folder that contains the files every Django project needs, which are:

  - __init__.py: This is an empty file that is only needed to make the other files importable.

  - settings.py: This file contains all the configuration of our project, and can be customized at will.

  - urls.py: This file contains all the URL mappings to Python functions. Any URL that needs to be handled by the project must have an entry here.

  - wsgi.py: This is the entry point that will be used when deploying our site to production.

- Pipfile: The list of Python libraries the project is using. At this point it is only Django.

- Pipfile.lock: Pipenv's lock file, which pins the exact versions of the installed packages.

6) Run the Django project using

$ ./manage.py runserver

7) Create Django's default database tables

$ ./manage.py migrate

8) Django creates an admin site at /admin by default; create an admin user and set its password using

$ ./manage.py createsuperuser

9) Django Projects vs. Apps:

We have just created an empty project. A project contains many modules, each of which is called an app. To create a new app named "main" inside the project, run:

$ ./manage.py startapp main

This creates a new folder named main that contains these items:

main/:

__init__.py

admin.py

apps.py

models.py

views.py

tests.py

migrations

Steps Summary

Create a folder project2 holding a project named p2 that contains one app named main:

mkdir project2

cd project2

pipenv --three install Django

pipenv shell

django-admin startproject p2 .

./manage.py migrate

./manage.py startapp main

Start working with a new app (main in our case)

To start working with a new app, create the following inside the app folder:

1) templates directory: this folder will contain all HTML files

2) templates/main: create a 'main' directory inside the templates folder (main is the new app we just created)

3) static directory: this folder will contain JS, images, and any other static content

4) Create the HTML start page "main/templates/home.html"

5) Add {% load static %} at the beginning of the HTML page and reference static resources located in the static directory like this: {% static 'images/logo.png' %}

6) Update INSTALLED_APPS in settings.py, adding the new app we just created, "main", to the list

INSTALLED_APPS = [

'django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

'main.apps.MainConfig',

]

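Note: if the new app were named "draw", the entry in INSTALLED_APPS would be 'draw.apps.DrawConfig'.

7) Update views.py to define the start page

8) Create main/urls.py inside the new app directory

9) Update the project's original urls.py to include the new app's routes file (main/urls.py)

10) Update main/models.py to define the database structure

11) Run the following commands to create the DB tables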

./manage.py makemigrations main

./manage.py migrate

How to Handle Static Files in Django using settings.py?

STATIC_ROOT and STATIC_URL handle the project's own static files.

During development, the static folder inside every app we create is accessible through the static URL. For deployment, run ./manage.py collectstatic to copy the static files from all app folders into the STATIC_ROOT folder.

_ROOT: where these files are located on disk

_URL: the URL prefix under which these files are served

Add the following two lines to settings.py:

STATIC_URL = '/static/'

STATIC_ROOT = os.path.join(BASE_DIR, 'static/')

How to Handle Files uploaded at run time using settings.py?

MEDIA_ROOT and MEDIA_URL handle files uploaded by users at run time.

_ROOT: where these files are located on disk

_URL: the URL prefix under which these files are served

MEDIA_URL = '/FileUpload/'

MEDIA_ROOT = os.path.join(BASE_DIR ,'static', 'FileUpload/')
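As a rough illustration of how each _URL/_ROOT pair relates a browser URL to a location on disk, here is a minimal standard-library sketch. The helper `url_to_disk_path` and the example `BASE_DIR` value are hypothetical and not part of Django; in a real project Django (or the web server in production) performs this mapping for you.

```python
import posixpath

# Hypothetical project root; in a real settings.py BASE_DIR is derived from __file__.
BASE_DIR = "/srv/project2"

STATIC_URL = "/static/"
STATIC_ROOT = posixpath.join(BASE_DIR, "static")
MEDIA_URL = "/FileUpload/"
MEDIA_ROOT = posixpath.join(BASE_DIR, "static", "FileUpload")


def url_to_disk_path(url, url_prefix, root):
    """Illustrative only: translate a request URL into a file path under root."""
    if not url.startswith(url_prefix):
        raise ValueError(f"{url!r} is not served under {url_prefix!r}")
    relative = url[len(url_prefix):]
    return posixpath.join(root, relative)


print(url_to_disk_path("/static/images/logo.png", STATIC_URL, STATIC_ROOT))
print(url_to_disk_path("/FileUpload/profile_pics/me.png", MEDIA_URL, MEDIA_ROOT))
```

The URL prefix is what appears in templates and in the browser, while the root is only ever seen by the server.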

What is MIDDLEWARE found on settings.py?

Middleware lets you inject code that is executed at specific points of the HTTP request/response cycle.

By default we have the following middleware:

MIDDLEWARE = [

'django.middleware.security.SecurityMiddleware',

'django.contrib.sessions.middleware.SessionMiddleware',

'django.middleware.common.CommonMiddleware',

'django.middleware.csrf.CsrfViewMiddleware',

'django.contrib.auth.middleware.AuthenticationMiddleware',

'django.contrib.messages.middleware.MessageMiddleware',

'django.middleware.clickjacking.XFrameOptionsMiddleware',

]
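To make the request/response cycle concrete, the sketch below mimics the shape of a Django function-based middleware (a factory that receives `get_response` and returns a callable) using plain Python objects. The dict-based request and response and the names `timing_middleware` and `hello_view` are stand-ins for illustration, not Django classes.

```python
import time


def timing_middleware(get_response):
    """Factory with the same shape as a Django function-based middleware."""

    def middleware(request):
        # Code here runs before the view (and before later middleware).
        started = time.perf_counter()
        response = get_response(request)
        # Code here runs after the view has produced a response.
        response["X-Elapsed-Seconds"] = f"{time.perf_counter() - started:.6f}"
        return response

    return middleware


def hello_view(request):
    # Stand-in for a view: returns a dict instead of an HttpResponse.
    return {"status": 200, "body": f"Hello from {request['path']}"}


handler = timing_middleware(hello_view)
response = handler({"path": "/admin/"})
print(response["status"], response["body"])
```

In a real project the factory would live in a module of its own, and its dotted path would be added to the MIDDLEWARE list.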

What is context_processors inside TEMPLATES in settings.py?

Context processors are a way to inject additional variables in the scope of templates. By doing so, you would not have to do it in every view that requires these variables.

For example, to allow templates to access MEDIA_URL:

'context_processors': [

'django.template.context_processors.debug',

'django.template.context_processors.request',

'django.contrib.auth.context_processors.auth',

'django.contrib.messages.context_processors.messages',

'django.template.context_processors.media', #to use {{ MEDIA_URL }} in templates

],
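A context processor is just a function that takes the request and returns a dictionary of variables for the template. The sketch below shows that shape with plain Python; `site_info`, `SITE_NAME`, and `build_context` are hypothetical names, and the merging loop only imitates what Django's template engine does.

```python
def site_info(request):
    """Shaped like a context processor: request in, dict of variables out."""
    return {"SITE_NAME": "My Site", "CURRENT_PATH": request["path"]}


def build_context(request, view_context, processors):
    # Imitates the engine: processor output and the view's own context
    # end up in one namespace that the template can read.
    context = {}
    for processor in processors:
        context.update(processor(request))
    context.update(view_context)
    return context


context = build_context({"path": "/newuser/"}, {"title": "Edit My Profile"}, [site_info])
print(context)
```

A real processor would be registered by adding its dotted path to the 'context_processors' list.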

How to Configure the Django Default DB using settings.py?

1) For PostgreSQL, you need to

a) install the PostgreSQL drivers

b) run pipenv install psycopg2

c) update settings.py

DATABASES = { 'default': { 'NAME': 'app_data', 'ENGINE': 'django.db.backends.postgresql', 'USER': 'postgres_user', 'PASSWORD': 'xxxx' } }

2) For MySQL, you need to

a) install the MySQL drivers

b) run pipenv install mysqlclient

c) update settings.py (driver options such as charset belong under 'OPTIONS')

DATABASES = { 'default': { 'NAME': 'user_data', 'ENGINE': 'django.db.backends.mysql', 'USER': 'mysql_user', 'PASSWORD': 'xxxx', 'HOST': '127.0.0.1', 'PORT': '3306', 'OPTIONS': { 'charset': 'utf8', 'use_unicode': True } } }
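Rather than hard-coding credentials such as 'xxxx', one common option is to read them from environment variables. The fragment below is a sketch of that idea for settings.py; the variable names (DB_ENGINE, DB_NAME, and so on) and the defaults are assumptions chosen for illustration.

```python
import os


def database_settings(env=os.environ):
    """Build a DATABASES dict from environment variables (names are illustrative)."""
    return {
        "default": {
            "ENGINE": env.get("DB_ENGINE", "django.db.backends.postgresql"),
            "NAME": env.get("DB_NAME", "app_data"),
            "USER": env.get("DB_USER", "postgres_user"),
            "PASSWORD": env.get("DB_PASSWORD", ""),
            "HOST": env.get("DB_HOST", "127.0.0.1"),
            "PORT": env.get("DB_PORT", "5432"),
        }
    }


DATABASES = database_settings()
print(DATABASES["default"]["ENGINE"])
```

Passing a plain dict instead of os.environ makes the function easy to try out without touching the real environment.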

A view can be a function or a class

urls.py

    path('Messages1/', views.MyFormView.as_view(), name='MyFormView'),
    path('Messages2/', views.myview, name='myview'),

views.py

    from django.http import HttpResponseRedirect
    from django.shortcuts import render
    from django.views import View

    from .forms import MyForm  # assumes MyForm is defined in the app's forms.py


    def myview(request):
        if request.method == "POST":
            form = MyForm(request.POST)
            if form.is_valid():
                return HttpResponseRedirect('/success/')
        else:
            form = MyForm(initial={'key': 'value'})
        return render(request, 'form_template.html', {'form': form})


    class MyFormView(View):
        form_class = MyForm
        template_name = 'form_template.html'

        def get(self, request):
            form = self.form_class(initial={'key': 'value'})
            return render(request, self.template_name, {'form': form})

        def post(self, request):
            form = self.form_class(request.POST)
            if form.is_valid():
                return HttpResponseRedirect('/success/')
            return render(request, self.template_name, {'form': form})

Create a Login Screen

Create File Upload

Sample Complete Code

Model

    from django.db import models


    class User(models.Model):
        username = models.CharField(max_length=100, blank=True)
        email = models.CharField(max_length=100, blank=True)
        password = models.CharField(max_length=100, blank=True)


    class Profile(models.Model):
        user = models.OneToOneField(User, on_delete=models.CASCADE)
        company = models.CharField(max_length=30, blank=True)
        company_site = models.URLField(blank=True)
        # ImageField requires the Pillow package to be installed
        profile_pic = models.ImageField(upload_to='profile_pics', blank=True)

Form

    from django import forms

    from .models import Profile, User


    class ProfileForm(forms.ModelForm):
        class Meta:
            model = Profile
            fields = ['company', 'company_site', 'profile_pic']


    class RegisterForm(forms.ModelForm):
        username = forms.CharField(
            max_length=100,
            label='User Name',
            widget=forms.TextInput(
                attrs={
                    "class": "form-control",
                    "placeholder": "Your Username",
                }
            ),
        )
        email = forms.EmailField(max_length=100)
        password = forms.CharField(widget=forms.PasswordInput)

        class Meta:
            model = User
            fields = ['username', 'email', 'password']

        def clean_username(self):
            # Reject usernames that already exist
            username = self.cleaned_data.get("username")
            qs = User.objects.filter(username=username)
            if qs.exists():
                raise forms.ValidationError("Username is taken")
            return username

        def clean_email(self):
            # Reject email addresses that already exist
            email = self.cleaned_data.get("email")
            qs = User.objects.filter(email=email)
            if qs.exists():
                raise forms.ValidationError("email is taken")
            return email

View

    from django.contrib import messages
    from django.contrib.auth.hashers import make_password
    from django.db import transaction
    from django.shortcuts import redirect, render

    from .forms import ProfileForm, RegisterForm


    @transaction.atomic
    def NewUser(request):
        if request.method == 'POST':
            user_form = RegisterForm(request.POST)
            # Pass request.FILES so the uploaded profile_pic reaches the form
            profile_form = ProfileForm(request.POST, request.FILES)
            if user_form.is_valid() and profile_form.is_valid():
                user = user_form.save(commit=False)
                # User is a plain model without set_password(), so hash explicitly
                user.password = make_password(user.password)
                user.save()
                profile = profile_form.save(commit=False)
                profile.user = user
                profile.save()
                messages.success(request, 'Your profile was successfully updated!')
                return redirect('z3950:newuser')
            else:
                messages.error(request, 'Please correct the error below.')
        else:
            user_form = RegisterForm()
            profile_form = ProfileForm()
        return render(request, 'z3950/newuser.html', {
            'user_form': user_form,
            'profile_form': profile_form,
        })

Template

HomeForm.html

    <html>
    <head>
        <title>
            {% block title %}
            Edit My Profile
            {% endblock %}
        </title>
    </head>
    <body>
        {% if messages %}
        <div class="alert alert-secondary" role="alert">
            {% for message in messages %}
            {{ message }}
            {% endfor %}
        </div>
        {% endif %}
        {% block Contents %}
        {% endblock %}
    </body>
    </html>

newuser.html

    {% extends 'HomeForm.html' %}

    {% block title %}
    Edit My Profile
    {% endblock %}

    {% block Contents %}
    {% if user_form.errors or profile_form.errors %}
    <div class="alert alert-danger" role="alert">
        {{ user_form.errors }}
        {{ profile_form.errors }}
    </div>
    {% endif %}
    <div class="row">
        <div class="col-lg-12">
            <div class="card">
                <div class="card-header d-flex align-items-center">
                    <h4>Edit My User Info.</h4>
                </div>
                <div class="card-body">
                    <form class="form-horizontal" method="POST" action="" enctype="multipart/form-data">
                        {% csrf_token %}
                        {% for field in user_form %}
                        <div class="form-group row">
                            <label class="col-sm-2 form-control-label">{{ field.label_tag }}</label>
                            <div class="col-sm-10">
                                <div class="input-group">
                                    {{ field }}
                                    <div class="invalid-feedback">{{ field.errors }}</div>
                                </div>
                            </div>
                        </div>
                        {% endfor %}
                        {% for field in profile_form %}
                        <div class="form-group row">
                            <label class="col-sm-2 form-control-label">{{ field.label_tag }}</label>
                            <div class="col-sm-10">
                                <div class="input-group">
                                    {{ field }}
                                    <div class="invalid-feedback">{{ field.errors }}</div>
                                </div>
                            </div>
                        </div>
                        {% endfor %}
                        <div class="form-group row">
                            <div class="col-sm-4 offset-sm-2">
                                <button type="submit" class="btn btn-primary">Submit</button>
                                <button type="reset" class="btn btn-secondary">Reset</button>
                            </div>
                        </div>
                    </form>
                </div>
            </div>
        </div>
    </div>
    {% endblock %}

Tuesday, November 26, 2019

Add Azure Active Directory User to Local Group

How do I add Azure Active Directory User to Local db2admins Group?

With Windows 10 you can join an organisation (=Azure Active Directory) and login with your cloud credentials.

Open a command prompt as Administrator and run the command

net localgroup db2admins AzureAD\MOHAMEDRAFIE /add

Notes:


You cannot use the domain user ID to run the db2cmd command to create a new database and tables. If you do, you might see this error in the DB2 log files:

DB2 cannot look up the domain user ID "USERID" as an authorization ID. It ignores the local group for the domain user ID. Even if you add the domain user ID to the local DB2ADMNS group, DB2 does not have the authority to perform database operations.

SQL1092N "USERID does not have the authority to perform the requested command or operation."

Resolving the problem

To enable the domain user ID to access the database, complete the following steps.

- Add the domain user ID to the local group DB2ADMNS.

- Open the DB2 command window and run the following commands from the prompt:

db2set DB2_GRP_LOOKUP=LOCAL,TOKENLOCAL

db2 update dbm cfg using sysadm_group DB2ADMNS

db2stop

db2start

Tuesday, November 19, 2019

OpenCV

OpenCV 3.4.3 (an open source computer vision framework) works with Python 3.7.

OpenCV supports the C/C++, Python, and Java languages, and it can be used to build computer vision applications for desktop and mobile operating systems alike, including Windows, Linux, macOS, Android, and iOS.

OpenCV started at Intel Research Lab during an initiative to advance approaches for building CPU-intensive applications.

How to Get OpenCV Working with Python

Install Python 3.7 x64

then

pip install "numpy-1.14.6+mkl-cp37-cp37m-win_amd64.whl"

pip install "opencv_python-3.4.3+contrib-cp37-cp37m-win_amd64.whl"

Validate the OpenCV installation by running the import command

>>> import cv2

Install OpenCV on MAC

brew install python

pip install numpy

brew install opencv --with-tbb --with-opengl

OpenCV consists of two types of modules:

- Main modules: Provide the core functionalities such as image processing tasks, filtering, transformation, and others.

- Extra modules: These modules do not come by default with the OpenCV distribution. These modules are related to additional computer vision functionalities such as text recognition.

List of OpenCV Main Modules

- core: Includes all core OpenCV functionalities such as basic structures, Mat classes, and so on.

- imgproc: Includes image-processing features such as transformations, manipulations, filtering, and so on.

- imgcodecs: Includes functions for reading and writing images.

- videoio: Includes functions for reading and writing videos.

- highgui: Includes functions for GUI creation to visualize results.

- video: Includes video analysis functions such as motion detection and tracking, the Kalman filter, and the well-known CAMShift algorithm (used for object tracking).

- calib3d: Includes calibration and 3D reconstruction functions that are used for the estimation of transformation between two images.

- features2d: Includes functions for keypoint-detection and descriptor-extraction algorithms that are used in object detection and categorization algorithms.

- objdetect: Supports object detection.

- dnn: Used for object detection and classification purposes, among others. The dnn module is relatively new in the list of main modules and has support for deep learning.

- ml: Includes functions for classification and regression and covers most of the machine learning capabilities.

- flann: Supports optimized algorithms that deal with the nearest neighbor search of high-dimensional features in large data sets. FLANN stands for Fast Library for Approximate Nearest Neighbors.

- photo: Includes functions for photography-related computer vision such as removing noise, creating HDR images, and so on.

- stitching: Includes functions for image stitching that further uses concepts such as rotation estimation and image warping.

- shape: Includes functions that deal with shape transformation, matching, and distance-related topics.

- superres: Includes algorithms that handle resolution and enhancement.

- videostab: Includes algorithms used for video stabilization.

- viz: Displays widgets in a 3D visualization window.

OpenCV Sample Code

Task 1: Read an image, convert it to grayscale, show both images, and save the grayscale image to disk

    import cv2

    original_image = cv2.imread("./images/panda.jpg")
    gray_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2GRAY)

    cv2.imshow("Gray panda", gray_image)
    cv2.imshow("Color panda", original_image)

    # The file extension tells imwrite which image format to use
    cv2.imwrite("gray_panda.jpg", gray_image)

    cv2.waitKey(0)
    cv2.destroyAllWindows()
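For intuition about what cv2.COLOR_BGR2GRAY computes, OpenCV documents the conversion as a weighted sum of the channels, Y = 0.299 R + 0.587 G + 0.114 B. The pure-Python sketch below applies that formula to a single pixel; `bgr_pixel_to_gray` is a hypothetical helper, and OpenCV's own implementation works on whole arrays and may round slightly differently.

```python
def bgr_pixel_to_gray(pixel):
    """Weighted-sum grayscale for one (blue, green, red) pixel with 0-255 values."""
    blue, green, red = pixel  # OpenCV stores channels in BGR order
    return int(round(0.299 * red + 0.587 * green + 0.114 * blue))


print(bgr_pixel_to_gray((0, 0, 0)))    # black pixel
print(bgr_pixel_to_gray((0, 0, 255)))  # pure red in BGR order
```

Green contributes the most to the result and blue the least, which roughly follows how bright each colour appears to the eye.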

Task 2: Open the user's camera, read it frame by frame and show each frame on screen; exit when the user presses Esc

    import cv2

    cap = cv2.VideoCapture(0)  # 0 selects the default camera

    while True:
        ret, frame = cap.read()
        if not ret:  # stop if no frame could be read
            break
        cv2.imshow("frame", frame)
        key = cv2.waitKey(1)
        if key == 27:  # 27 is the Esc key
            break

    cap.release()
    cv2.destroyAllWindows()

Task 3: Open a video file and show it frame by frame until the user presses Esc

    import cv2

    mountains_video = cv2.VideoCapture("mountains.mp4")

    while True:
        ret, frame = mountains_video.read()
        if not ret:  # end of the video or read error
            break
        cv2.imshow("frame", frame)
        key = cv2.waitKey(25)
        if key == 27:  # 27 is the Esc key
            break

    mountains_video.release()
    cv2.destroyAllWindows()

Task 4: Flip the camera stream, save it to disk, and show it frame by frame until the user presses q

    import cv2

    cap = cv2.VideoCapture(0)

    # Define the codec and create the VideoWriter object.
    # The frame size must match the size of the frames being written.
    fourcc = cv2.VideoWriter_fourcc(*'XVID')
    out = cv2.VideoWriter('output.avi', fourcc, 20.0, (640, 480))

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv2.flip(frame, 0)  # 0 flips the frame vertically
        out.write(frame)  # write the flipped frame
        cv2.imshow('frame', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()
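The second argument of cv2.flip is the flip code: 0 flips around the x-axis (vertically), a positive value flips around the y-axis (horizontally), and a negative value does both. A tiny pure-Python sketch on a nested list, with the hypothetical helper `flip_rows_cols` standing in for cv2.flip, shows the three cases:

```python
def flip_rows_cols(frame, flip_code):
    """Mimic cv2.flip's flip codes on a list of rows (illustration only)."""
    if flip_code == 0:
        return frame[::-1]                     # vertical: reverse the row order
    if flip_code > 0:
        return [row[::-1] for row in frame]    # horizontal: reverse each row
    return [row[::-1] for row in frame[::-1]]  # both axes


frame = [[1, 2],
         [3, 4]]
print(flip_rows_cols(frame, 0))
print(flip_rows_cols(frame, 1))
print(flip_rows_cols(frame, -1))
```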

Notes

OpenCV does not provide any way to train a DNN. However, you can train a DNN model using frameworks like TensorFlow, MXNet, or Caffe, and import it into OpenCV for your application.

OpenVINO is specifically designed to speed up networks used in visual tasks like image classification and object detection.

When we think of AI, we usually think about companies like IBM, Google, and Facebook.

They are indeed leading the way in algorithms, but AI is computationally expensive during training as well as inference.

Therefore, it is equally important to understand the role of hardware companies in the rise of AI.

NVIDIA provides the best GPUs as well as the best software support using CUDA and cuDNN for Deep Learning.

NVIDIA pretty much owns the market for Deep Learning when it comes to training a neural network.

However, GPUs are expensive and not always necessary for inference (inference means using a trained model in production).

In fact, most of the inference in the world is done on CPUs!

In the inference space, Intel is a big player: it manufactures Vision Processing Units (VPUs), integrated GPUs, and FPGAs, all of which can be used for inference.

To spare developers from writing hardware-specific code to get the most out of this hardware, Intel provides the OpenVINO framework.

OpenVINO enables CNN-based deep learning inference on the edge, supports heterogeneous execution across computer vision accelerators, speeds time to market via a library of functions and pre-optimized kernels and includes optimized calls for OpenCV and OpenVX.

How to use OpenVINO?

1) Neither OpenCV nor OpenVINO provides tools to train a neural network, so train your model using TensorFlow or PyTorch.

2) The model obtained in the previous step is usually not optimized for performance.

OpenVINO requires us to create an optimized model, which it calls an Intermediate Representation (IR), using the Model Optimizer tool it provides.

The result of the optimization process is an IR model, which is split into two files:

model.xml: This XML file contains the network architecture.

model.bin: This binary file contains the weights and biases.

3) OpenVINO Inference Engine plugin: OpenVINO optimizes running this model on specific hardware through the Inference Engine plugin.


Tuesday, November 5, 2019

Mac shortcuts and app tips for Windows users

- Home: Control–A

- End: Control–E

- Copy: Command–C

- Cut: Command–X

- Paste: Command–V

- Select All: Command–A

- Page Up: Fn–Up Arrow

- Page Down: Fn–Down Arrow

- Fn–Up Arrow: Page Up: Scroll up one page.

- Fn–Down Arrow: Page Down: Scroll down one page.

- Fn–Left Arrow: Home: Scroll to the beginning of a document.

- Fn–Right Arrow: End: Scroll to the end of a document.

- Command–Up Arrow: Move the insertion point to the beginning of the document.

- Command–Down Arrow: Move the insertion point to the end of the document.

- Command–Left Arrow: Move the insertion point to the beginning of the current line.

- Command–Right Arrow: Move the insertion point to the end of the current line.

- Option–Left Arrow: Move the insertion point to the beginning of the previous word.

- Option–Right Arrow: Move the insertion point to the end of the next word.

For the complete list of shortcuts, visit

https://support.apple.com/en-us/HT201236

Apps

Download manager

Screen Shots

NotePad

SSH Console

Monday, November 4, 2019

Install Solr 8.2 based on Java 11

sudo apt install -y default-jdk

wget http://www-eu.apache.org/dist/lucene/solr/8.2.0/solr-8.2.0.tgz

tar xzf solr-8.2.0.tgz solr-8.2.0/bin/install_solr_service.sh --strip-components=2

sudo bash ./install_solr_service.sh solr-8.2.0.tgz

Solr is now running on your server, and you can check it at

http://127.0.0.1:8983/solr/#/

Start, Stop and check the status of Solr service

sudo systemctl stop solr

sudo systemctl start solr

sudo systemctl status solr

To create a new search core

sudo su - solr -c "/opt/solr/bin/solr create -c mycol1 -n data_driven_schema_configs"
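To confirm from Python that the new core is there, one option is Solr's CoreAdmin STATUS action. This is a minimal sketch, assuming Solr listens on the address shown earlier and that an unknown core comes back as an empty status entry; the function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

SOLR_BASE = "http://127.0.0.1:8983/solr"  # same address as the admin UI above


def core_status_url(core, base=SOLR_BASE):
    """Build the CoreAdmin STATUS URL for one core."""
    params = urlencode({"action": "STATUS", "core": core})
    return f"{base}/admin/cores?{params}"


def core_exists(core, base=SOLR_BASE):
    """Ask Solr for the core's status; needs a running Solr server."""
    with urlopen(core_status_url(core, base)) as response:
        status = json.load(response)["status"]
    # Assumption: Solr reports an empty entry for a core it does not know.
    return bool(status.get(core))
```

With Solr running, core_exists("mycol1") should report whether the core created above is loaded.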

Saturday, November 2, 2019

Install DLib machine learning library

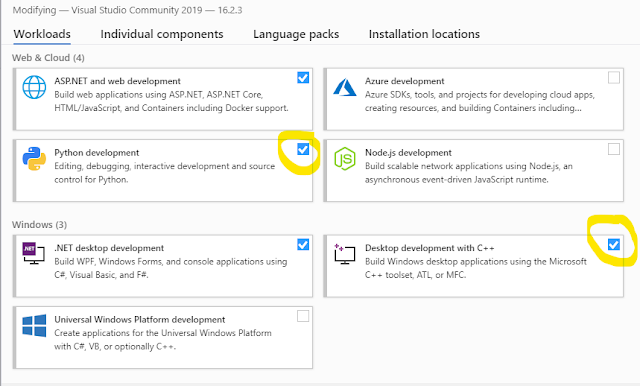

Download Visual Studio 2019 Community Edition from

https://visualstudio.microsoft.com/vs/

and choose "Python development" and "Desktop development with C++"

Then run

pip install dlib

OR

download dlib source code and run the command inside its folder

python setup.py install

OR

install miniconda, then run the next command

conda install -c conda-forge dlib

Thursday, October 31, 2019

Send SMS from Python

1) Create a trial account on https://www.twilio.com/try-twilio

2) Install the Twilio Python client library:

pip3 install twilio

3) Send SMS

Complete Python Code

from twilio.rest import Client

# Twilio account details

twilio_account_sid = 'Your Twilio SID here'

twilio_auth_token = 'Your Twilio Auth Token here'

twilio_source_phone_number = 'Your Twilio phone number here'

# Create a Twilio client object instance

client = Client(twilio_account_sid, twilio_auth_token)

# Send an SMS

message = client.messages.create(

body="This is my SMS message!",

from_=twilio_source_phone_number,

to="Destination phone number here"

)

Monday, October 14, 2019

Solr Field Attributes

Comparing querying in Solr with querying in SQL databases, the mapping is as follows.

SQL query:

select album,title,artist

from hellosolr

where album in ("solr","search","engine") order by album DESC limit 20,10;

Solr query:

$ curl "http://localhost:8983/solr/hellosolr/select?q=solr+search+engine&fl=album,title,artist&start=20&rows=10&sort=album+desc"
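The same request can be assembled in Python so that spaces and commas are URL-encoded automatically. This is a sketch using only the standard library; select_url is a hypothetical helper, and the host and core name are taken from the curl example.

```python
from urllib.parse import urlencode


def select_url(core, query, fields, start, rows, sort,
               base="http://localhost:8983/solr"):
    """Build a Solr /select URL from the usual query parameters."""
    params = {
        "q": query,
        "fl": ",".join(fields),   # fields to return
        "start": start,           # offset of the first result
        "rows": rows,             # number of results to return
        "sort": sort,             # e.g. "album desc"
    }
    return f"{base}/{core}/select?{urlencode(params)}"


url = select_url("hellosolr", "solr search engine",
                 ["album", "title", "artist"], 20, 10, "album desc")
print(url)
```

urlencode writes spaces as + and commas as %2C, which Solr decodes back to the original values.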

If you want to search a field for multiple tokens, you need to surround it with parentheses:

q=title:(to kill a mockingbird)&df=album

q=title:(buffalo OR soldier) OR artist:(bob OR marley)

Query Operators

The following are operators supported by query parsers:

OR: A union is performed; a document matches if any of the clauses is satisfied.

AND: An intersection is performed; a document matches only if both clauses are satisfied.

NOT: Operator to exclude documents containing the clause.

+/-: Operators to mandate the occurrence of terms. + requires the token to be present in matching documents, and - requires it to be absent.
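The operators above can be combined programmatically. The helpers below are a hypothetical sketch that only joins strings; they assume the terms contain no characters that need escaping in the Solr query syntax.

```python
def field_clause(field, terms, operator="OR"):
    """Build field:(t1 OP t2 ...) for use in the q parameter."""
    if operator not in ("OR", "AND"):
        raise ValueError("operator must be OR or AND")
    joined = f" {operator} ".join(terms)
    return f"{field}:({joined})"


def require(clause):
    """Prefix a clause with + so matching documents must contain it."""
    return "+" + clause


def exclude(clause):
    """Prefix a clause with - so matching documents must not contain it."""
    return "-" + clause


query = " OR ".join([
    field_clause("title", ["buffalo", "soldier"]),
    field_clause("artist", ["bob", "marley"]),
])
print(query)
```

The printed query is the same two-field OR query shown earlier in this post.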

Phrase Query ""

exact search

q="bob marley"

Proximity Query ~ INT

A proximity query requires the phrase query to be followed by the tilde (~) operator and a numeric distance for identifying the terms in proximity.

q="jamaican singer"~3

Wildcard Query ? *

You can specify the wildcard character ?, which matches exactly one character, or *, which matches zero or more characters.

q=title:(bob* OR mar?ey) OR album:(*bird)

Range Query [] {}

q=price:[1000 TO 5000] // 1000 <= price <= 5000

q=price:{1000 TO 5000} // 1000 < price < 5000

q=price:[1000 TO 5000} // 1000 <= price < 5000

q=price:[1000 TO *] // 1000 <= price
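Square brackets include the bound and curly braces exclude it. A small sketch of a helper that picks the right bracket (range_query is a hypothetical name, not part of any Solr client):

```python
def range_query(field, low="*", high="*",
                include_low=True, include_high=True):
    """Build a Solr range clause; * leaves that side open-ended."""
    left = "[" if include_low else "{"     # [ means low <= value
    right = "]" if include_high else "}"   # ] means value <= high
    return f"{field}:{left}{low} TO {high}{right}"


print(range_query("price", 1000, 5000))                      # 1000 <= price <= 5000
print(range_query("price", 1000, 5000, include_high=False))  # 1000 <= price < 5000
print(range_query("price", 1000))                            # 1000 <= price
```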

Check whether a field exists or not

To match documents where the field exists:

field:[* TO *]

To match documents where the field does not exist:

q=*:* -Tag_100_is:[* TO *]

Filter Query fq

A filter query restricts the set of documents before the main query is applied. For example, select only the documents that have language=english and genre=rock.

If multiple fq parameters are specified, the query parser will select the subset of documents that matches all the fq queries.

q=singer:(bob marley) title:(redemption song)&fq=language:english&fq=genre:rock

q=product:hotel&fq=city:"las vegas" AND category:travel
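Because fq can be repeated, the parameters are easiest to build as a list of pairs rather than a dictionary. A minimal sketch with the standard library (query_string is a hypothetical helper):

```python
from urllib.parse import parse_qs, urlencode


def query_string(q, filters):
    """Encode one q parameter plus one fq parameter per filter."""
    params = [("q", q)] + [("fq", fq) for fq in filters]
    return urlencode(params)


qs = query_string("title:(redemption song)",
                  ["language:english", "genre:rock"])
print(qs)
# Decoding shows both filters survive as separate fq values.
print(parse_qs(qs)["fq"])
```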

rows= & start=

Use start and rows together to get paginated search results.
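For example, a page number can be turned into start and rows like this. It is a sketch that assumes pages are numbered from 1; page_params is a hypothetical helper.

```python
def page_params(page, page_size=10):
    """Translate a 1-based page number into Solr start/rows values."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be at least 1")
    return {"start": (page - 1) * page_size, "rows": page_size}


print(page_params(3))
```

Page 3 with 10 rows per page corresponds to start=20&rows=10.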

sort

Comma-separated list of fields on which the result should be sorted. Each field name should be followed by the asc or desc keyword.

sort=score desc,popularity desc

fl

Specifies the comma-separated list of fields to be displayed in the response.

wt

Specifies the format in which the response should be returned, such as JSON, XML, or CSV.

debugQuery

This Boolean parameter works wonders to analyze how the query is parsed and how a document got its score. Debug operations are costly and should not be enabled on live production queries. This parameter supports only XML and JSON response format currently.

explainOther

explainOther is quite useful for analyzing documents that are not part of a debug explanation. debugQuery explains the score of documents that are part of the result set (if you specify rows=10, debugQuery will add an explanation for only those 10 documents). If you want an explanation for additional documents, you can specify a Lucene query in the explainOther parameter for identifying those additional documents. Remember, the explainOther query will select the additional document to explain, but the explanation will be with respect to the main query.

The Solr schema is saved in the "managed-schema" file

Sample File Content

<schema name="default-config" version="1.6">

<field name="id" type="string" indexed="true" stored="true" required="true" multiValued="false" />

<field name="_version_" type="plong" indexed="false" stored="false"/>

<field name="_root_" type="string" indexed="true" stored="false" docValues="false" />

<field name="_text_" type="text_general" indexed="true" stored="false" multiValued="true"/>

</schema>

Explain Solr Field Attributes

name: each field must have a name

type: each field must have a type

indexed: whether the field is searchable

stored: whether the field value is stored and can be returned to the user

multiValued: whether the value is a single value or an array of values

default: the default value to use if the field is missing

sortMissingLast: place documents that are missing the field at the end of sorted results

sortMissingFirst: place documents that are missing the field at the beginning of sorted results

required: Setting this attribute as true specifies a field as mandatory.

docValues: true creates a forward index for the field. Note: an inverted index is not efficient for sorting, faceting, and highlighting; docValues promises to make these faster and also frees up the fieldCache.

omitNorms: Fields have norms associated with them, which hold additional information such as index-time boost and length normalization. Specifying omitNorms="true" discards this information, saving some memory. Length normalization allows Solr to give lower weight to longer fields. If a length norm is not important in your ranking algorithm (such as metadata fields) and you are not providing an index-time boost, you can set omitNorms="true". By default, Solr disables norms for primitive fields.

The following are possible combinations of indexed and stored parameters:

indexed="true" & stored="true":

When you are interested in both querying and displaying the value of a field.

indexed="true" & stored="false":

When you want to query on a field but don’t need its value to be displayed. For example, you may want to only query on the extracted metadata but display the source field from which it was extracted.

indexed="false" & stored="true":

If you are never going to query on a field and only display its value.
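With a managed schema, a field using one of these combinations can also be added through Solr's Schema API instead of editing the file by hand. The sketch below only builds the HTTP request; the field name summary is hypothetical, and the host and core name are assumptions carried over from the earlier examples.

```python
import json
from urllib.request import Request


def add_field_request(core, name, field_type, indexed, stored,
                      base="http://localhost:8983/solr"):
    """Build (but do not send) a Schema API add-field request."""
    payload = {
        "add-field": {
            "name": name,
            "type": field_type,
            "indexed": indexed,  # searchable?
            "stored": stored,    # returned in results?
        }
    }
    return Request(
        f"{base}/{core}/schema",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Searchable but not displayed: indexed="true" & stored="false".
request = add_field_request("hellosolr", "summary", "text_general", True, False)
print(request.full_url)
print(request.data.decode("utf-8"))
```

Passing the request to urllib.request.urlopen would send it to a running Solr server.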

Define a new Solr field type that supports Arabic

<field name="subjects" type="text_ar_sort" indexed="true" stored="true"/>

<fieldType name="text_ar_sort" class="solr.SortableTextField" sortMissingLast="true" docValues="true" positionIncrementGap="100" multiValued="false">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" words="lang/stopwords_ar.txt" ignoreCase="true"/>

<filter class="solr.ArabicNormalizationFilterFactory"/>

<filter class="solr.ArabicStemFilterFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" words="lang/stopwords_ar.txt" ignoreCase="true"/>

<filter class="solr.ArabicNormalizationFilterFactory"/>

<filter class="solr.ArabicStemFilterFactory"/>

<filter class="solr.SynonymGraphFilterFactory" expand="true" ignoreCase="true" synonyms="synonyms.txt"/>

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

================================================

<analyzer type="index">

<analyzer type="query">

Example

<fieldType name="text_analysis" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.AsciiFoldingFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.PorterStemFilterFactory"/>

<filter class="solr.TrimFilterFactory"/>

</analyzer>

</fieldType>

Description

1. WhitespaceTokenizerFactory splits the text stream on whitespace. In the English language, whitespace separates words, and this tokenizer fits well for such text analysis. Had it been an unstructured text containing sentences, a tokenizer that also splits on symbols would have been a better fit, such as for the “Cappuccino.”

2. AsciiFoldingFilterFactory removes the accent as the user query or content might contain it.

3. StopFilterFactory removes the common words in the English language that don’t have much significance in the context and adds to the recall.

4. LowerCaseFilterFactory normalizes the tokens to lowercase, without which the query term mockingbird would not match the term in the movie name.

5. PorterStemFilterFactory converts the terms to their base form, without which the tokens kill and kills would not have matched.

6. TrimFilterFactory finally trims the tokens.
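The six steps above can be imitated in plain Python to see what the chain does to a piece of text. This is a toy approximation and not Solr's implementation: the stop-word set is a hypothetical stand-in for stopwords.txt, and toy_stem only strips a trailing "s" instead of running the Porter algorithm.

```python
import unicodedata

STOPWORDS = {"a", "an", "the", "to", "of"}  # hypothetical stopwords.txt


def fold_ascii(token):
    """Drop accents by removing combining marks after decomposition."""
    decomposed = unicodedata.normalize("NFKD", token)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def toy_stem(token):
    """Very rough stand-in for a stemmer: kills -> kill."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def analyze(text):
    tokens = text.split()                                       # 1. split on whitespace
    tokens = [fold_ascii(t) for t in tokens]                    # 2. remove accents
    tokens = [t for t in tokens if t.lower() not in STOPWORDS]  # 3. stop words, ignoreCase
    tokens = [t.lower() for t in tokens]                        # 4. lowercase
    tokens = [toy_stem(t) for t in tokens]                      # 5. stem
    return [t.strip() for t in tokens]                          # 6. trim


print(analyze("To Kill a Mockingbird"))
print(analyze("kills"))
```

Both the document text and the user query go through the same chain, which is why kill and kills end up as the same token.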

Tokenizer Implementations

Common Solr issues and how to solve them

Indexing Is Slow

Indexing can be slow for many reasons. The following are factors that can improve indexing performance, enabling you to tune your setup accordingly:

Memory: If the memory allocated to the JVM is low, garbage collection will be called more frequently and indexing will be slow.

Indexed fields: The number of indexed fields affects the index size, memory requirements, and merge time. Index only the fields that you want to be searchable.

Merge factor: Merging segments is an expensive operation. The higher the merge factor, the faster the indexing.

Commit frequency: The less frequently you commit, the faster indexing will be.

Batch size: The more documents you index in each request, the faster indexing will be.
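The last two points can be combined: send documents in batches and commit once at the end. Below is a sketch that splits documents into batches and builds one JSON update request per batch. The helper names, host, and core name are assumptions, and nothing is sent until a request is passed to urllib.request.urlopen.

```python
import json
from itertools import islice
from urllib.request import Request


def batches(docs, batch_size):
    """Yield lists of at most batch_size documents."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(docs)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def update_request(core, batch, commit=False,
                   base="http://localhost:8983/solr"):
    """Build (but do not send) a JSON update request for one batch."""
    url = f"{base}/{core}/update" + ("?commit=true" if commit else "")
    return Request(
        url,
        data=json.dumps(batch).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


docs = [{"id": str(i)} for i in range(5)]
groups = list(batches(docs, 2))
# Commit only with the last batch to keep the commit frequency low.
requests = [update_request("hellosolr", group, commit=(i == len(groups) - 1))
            for i, group in enumerate(groups)]
print([r.full_url for r in requests])
```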
