1. Data Consolidation: combines data from various sources into a centralized data store that acts as a single source of truth for the organization. Because the data lives in one unified store, it can serve all your reporting and analytics use cases and act as a data source for other applications. The trade-off is data latency: there is a delay between when data is updated in the original data source and when the update reaches your central repository.

2. Data Federation: Unlike data consolidation, where you move all data to a single source of truth, data federation leaves the data in its sources and exposes it through a virtual database. This technique abstracts the underlying sources behind a uniform interface, which simplifies data access and retrieval for consuming users and front-end applications. Queries to the federated virtual database are forwarded to the relevant data source, which returns the requested data. Because data is fetched at query time, this is an on-demand solution, in contrast to other real-time data integration techniques.

3. Data Propagation: uses applications to transfer data from an enterprise data warehouse to multiple data marts on an event-driven basis. As data is updated in the warehouse, the respective data marts are updated synchronously or asynchronously.

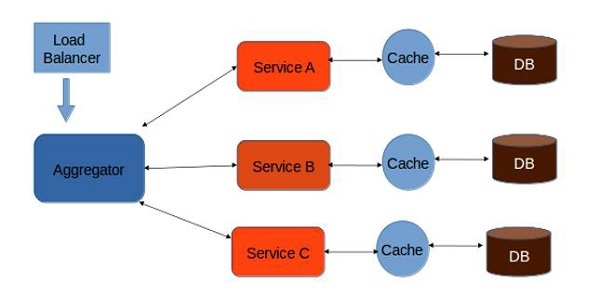

4. Middleware Data Integration: uses a middleware application to transfer data from multiple applications and source systems into a central repository. This approach validates and formats the data before beginning the transfer to the data store, thereby significantly reducing the chances of compromised data integrity or disorganized data. This is especially beneficial for integrating older systems with newer ones, as the middleware can help transform the legacy data into a format that the newer systems can understand.

5. Common Storage Integration (also called Data Warehousing or Common Data Storage): data is replicated from the source to a data warehouse. This data integration strategy includes cleansing, formatting, and transforming data before storing it in the data warehouse.
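To make the latency difference between consolidation (approach 1) and federation (approach 2) more concrete, here is a minimal sketch. The two in-memory dictionaries stand in for real source systems, and the names `ConsolidatedStore`, `FederatedView`, `crm`, and `billing` are hypothetical, chosen only for illustration.

```python
import copy

# Two hypothetical source systems, modeled as in-memory dicts.
crm = {"alice": {"plan": "free"}}
billing = {"alice": {"balance": 0}}
SOURCES = {"crm": crm, "billing": billing}


class ConsolidatedStore:
    """Central copy of all sources, refreshed only when sync() runs."""

    def __init__(self, sources):
        self._sources = sources
        self._snapshot = {}

    def sync(self):
        # Copy every source into the central store (a batch refresh).
        self._snapshot = copy.deepcopy(self._sources)

    def get(self, source, key):
        return self._snapshot[source][key]


class FederatedView:
    """Virtual layer: stores nothing, forwards each query to its source."""

    def __init__(self, sources):
        self._sources = sources

    def get(self, source, key):
        return self._sources[source][key]


store = ConsolidatedStore(SOURCES)
store.sync()
view = FederatedView(SOURCES)

# The source changes after the last sync.
crm["alice"]["plan"] = "pro"

stale = store.get("crm", "alice")["plan"]  # value as of the last sync
live = view.get("crm", "alice")["plan"]    # read from the source on demand
store.sync()
refreshed = store.get("crm", "alice")["plan"]
```

Until the next `sync()`, the consolidated store keeps answering with the old value, while the federated view reflects the change immediately because it holds no copy of its own.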

Data integration techniques

1) Change Data Capture (CDC)

What is it? Change Data Capture (CDC) is a data replication technique that identifies changes made to the source data and copies them into your data storage.

Pros:

Faster data replication. CDC is optimized for streamlining data replication. It only moves the data that has been altered (added, deleted, updated) since the last data integration, which saves networking costs during data movement and speeds up the overall replication process.

Event-driven. CDC can be configured to fire at every source data change event, which makes it a great ally for keeping data consistent between your source systems and data storage.

Cons:

Limited to SQL sources. CDC is primarily designed to replicate data from SQL databases and data warehouses. It’s hard to generalize it to other data sources.

No data transformation. CDC does one thing (data replication) and it does that one thing well. But it cannot be used to sanitize and cleanse data, or to provide more complex data transformation (e.g., prepare data for data analysis).

Best practice:

This data integration method is especially suited for big data sources, where the size of the data is a limiting factor for your integration operations. CDC analyzes the binary log to determine which events changed the data since the last replication and extracts only the affected rows, for lightweight, fast, and zero-maintenance data replication.
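The idea of replaying only the changes recorded since the last replication can be sketched as follows. This is a simplified illustration, not how any particular CDC tool is implemented: the `change_log` list is a hypothetical stand-in for a database's binary log, and the `replicate` function and its tuple layout are assumptions made for the example.

```python
# Hypothetical change log, in the order the source committed the changes.
# Each entry: (position in the log, operation, primary key, row or None).
change_log = [
    (1, "insert", 101, {"name": "Ada", "city": "London"}),
    (2, "insert", 102, {"name": "Lin", "city": "Taipei"}),
    (3, "update", 101, {"name": "Ada", "city": "Paris"}),
    (4, "delete", 102, None),
]


def replicate(log, replica, last_position):
    """Apply only the events recorded after last_position to the replica.

    Returns the new position so the next run can resume from it.
    """
    for position, operation, key, row in log:
        if position <= last_position:
            continue  # already replicated in an earlier run
        if operation in ("insert", "update"):
            replica[key] = row
        elif operation == "delete":
            replica.pop(key, None)
        last_position = position
    return last_position


replica = {}
# First run: the log only contains the two inserts so far.
position = replicate(change_log[:2], replica, last_position=0)
# Later run: the log has grown; only entries 3 and 4 are applied this time.
position = replicate(change_log, replica, last_position=position)
```

Tracking the last applied position is what keeps each run small: the second call skips the two inserts it has already seen and touches only the update and the delete.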

2) ETL

What is it?

ETL (extract, transform, load) is a data integration approach that applies dedicated techniques to each stage of the process: extracting data from the sources, transforming it, and loading it into the destination.

Pros:

Customizable data extractors. ETL is not limited to any data source. From applications to SQL databases, ETL can integrate data from (theoretically) any source.

Customizable data transformations. ETL tools lead the data transformation space, offering advanced data transformations (aggregations, complex SQL or Python queries, machine learning filters, etc.) that are rarely present in simple data integration platforms.

Customizable data storage. Unlike common storage integration (data warehousing), ETL tools can integrate data into one or more different data storages: from data lakes for unstructured data to BI tools directly for reverse ETL.

Cons:

No data consolidation guarantee. Because ETL tools offer more customizability (the freedom to specify the source, transformations, and destinations yourself), there is no predefined data model or unified data view. To guarantee data quality, you’ll have to impose data management and governance rules alongside this technique.

Greater integration latency. Unlike CDC or middleware data integration, ETL suffers from the same latency as common storage integration. The data transformation layer introduces latency that makes ETL a poor candidate for real-time data integration.

Best practice: ETL tools often offer all the functionality of common storage integration. Pick this integration paradigm if you expect your data model to change and adapt to your business needs. It is easier to adapt ETL integrations to a data warehouse than the other way around. For example, ETL is designed with plug-and-play components that can easily be swapped and customized, so you can pick the best-performing architecture for your data integration needs.
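As a rough illustration of the plug-and-play idea, the sketch below wires three independent stages into one pipeline using only the Python standard library. The function names (`extract_csv`, `total_per_customer`, `load_as_json`, `run_pipeline`) and the sample data are hypothetical; a real ETL tool would provide its own connectors and scheduling.

```python
import csv
import io
import json

# Hypothetical raw input; a real extractor would read a file, API, or database.
RAW_CSV = "customer,amount\nada,10.5\nlin,4\nada,2.5\n"


def extract_csv(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def total_per_customer(rows):
    """Transform: cast amounts to float and aggregate them per customer."""
    totals = {}
    for row in rows:
        customer = row["customer"]
        totals[customer] = totals.get(customer, 0.0) + float(row["amount"])
    return totals


def load_as_json(data, destination):
    """Load: write the result to a file-like destination as JSON."""
    json.dump(data, destination, sort_keys=True)


def run_pipeline(extract, transform, load):
    """Run the three stages in order; each stage is a swappable callable."""
    load(transform(extract()))


destination = io.StringIO()  # stands in for a warehouse table or data lake file
run_pipeline(
    extract=lambda: extract_csv(RAW_CSV),
    transform=total_per_customer,
    load=lambda data: load_as_json(data, destination),
)
result = json.loads(destination.getvalue())
```

Because `run_pipeline` only depends on three callables, swapping the CSV extractor for a database reader, or the JSON loader for a warehouse writer, would not require changes to the other stages.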